Drift Free Navigation with Deep Explainable Features

Modern SLAM systems—especially those relying on LIDAR or visual input—struggle with localization drift in scenes with few salient features or in the presence of dynamic obstacles. This project, DRNDEF, builds a robust pipeline that tackles that issue head-on. We frame the problem not just as a control task, but as one of perception: if an autonomous vehicle could identify and actively move toward features that reduce SLAM drift, it could improve its localization accuracy significantly without sacrificing safety or efficiency.

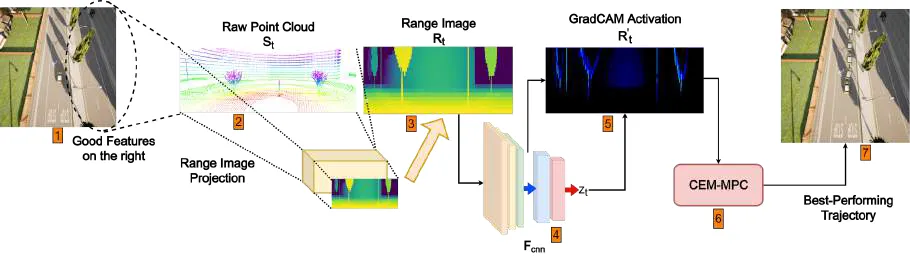

DRNDEF is a two-part system. First, a deep perception module observes LIDAR point clouds and identifies features that are good for localization (e.g., poles, tree trunks), using no explicit supervision. Then, a model-predictive control (MPC) planner generates feasible trajectories that steer the vehicle toward these “drift-reducing” features, while also accounting for dynamic obstacles and motion smoothness.

My main contributions were on the perception side. The LIDAR point clouds were projected down to 2D range images, where each pixel stores the depth of the corresponding point in the point cloud. This step was wired into PyTorch DataLoaders so that the projections could be used efficiently during training.
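To make the projection step concrete, here is a minimal pure-Python sketch of a spherical range-image projection. It is not the project's actual code: the function name `project_to_range_image`, the image size, and the vertical field of view are all assumptions chosen for illustration, and a real pipeline would vectorize this and run it inside a dataset class.

```python
import math

def project_to_range_image(points, height=16, width=64,
                           fov_up_deg=15.0, fov_down_deg=-15.0):
    """Project (x, y, z) LIDAR points onto a height x width range image.

    Rows index elevation and columns index azimuth. Each pixel keeps the
    range of the closest point that lands in it; 0.0 means no return.
    The image size and field of view are assumed values, not sensor specs.
    """
    fov_up = math.radians(fov_up_deg)
    fov_down = math.radians(fov_down_deg)
    fov = fov_up - fov_down
    image = [[0.0] * width for _ in range(height)]
    for x, y, z in points:
        rng = math.sqrt(x * x + y * y + z * z)
        if rng == 0.0:
            continue  # skip degenerate points at the sensor origin
        azimuth = math.atan2(y, x)       # horizontal angle in [-pi, pi]
        elevation = math.asin(z / rng)   # vertical angle
        if not fov_down <= elevation <= fov_up:
            continue  # outside the assumed vertical field of view
        col = int(0.5 * (1.0 - azimuth / math.pi) * width)
        row = int((fov_up - elevation) / fov * height)
        col = min(max(col, 0), width - 1)
        row = min(max(row, 0), height - 1)
        if image[row][col] == 0.0 or rng < image[row][col]:
            image[row][col] = rng  # keep the nearest return per pixel
    return image
```

Calling `project_to_range_image([(10.0, 0.0, 0.0), (5.0, 0.0, 0.0)])` places both points in the same pixel and keeps the nearer one, which is the usual convention when several returns collide.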

Then, I helped design a directional triplet ranking loss that trains a CNN to rank range images (2D projections of LIDAR scans) based on the drift they cause downstream in LOAM (a standard LIDAR SLAM system). This avoids the instability of directly regressing drift values and instead lets the model learn relative comparisons between nearly identical inputs. To make the output interpretable, we used GradCAM to extract activation maps, letting us visualize which parts of the scene the model thought were most useful for reducing drift. These maps became targets for the MPC planner.
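The idea of learning relative comparisons instead of regressing drift can be illustrated with a small hinge-style ranking loss. This is only one plausible form, written in plain Python on scalar scores. The exact loss used in the project is not given here, so the function names, the margin, and the pairwise decomposition below are assumptions.

```python
def pairwise_hinge(score_low, score_high, margin=1.0):
    """Zero once the higher-drift image scores at least `margin` above the other."""
    return max(0.0, margin - (score_high - score_low))

def triplet_ranking_loss(scores, drifts, margin=1.0):
    """Hinge ranking loss over three range images ordered by measured drift.

    scores: predicted drift scores for the three images (model outputs).
    drifts: measured drift for the same images, e.g. from a SLAM run.
    Only the ordering of `drifts` is used, never their magnitudes.
    """
    order = sorted(range(3), key=lambda i: drifts[i])  # low -> high drift
    low, mid, high = (scores[i] for i in order)
    return pairwise_hinge(low, mid, margin) + pairwise_hinge(mid, high, margin)
```

Because the loss depends only on the ordering of the measured drift, noisy drift magnitudes do not propagate into the training signal. That property is what makes ranking more stable than direct regression.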

On the planning side, we implemented a CEM-based MPC that samples hundreds of trajectories in parallel, scores them based on proximity to these features and standard motion cost, and updates its distribution in a receding-horizon loop. The planner was GPU-accelerated and written in JAX for speed.
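The sample, score, and refit cycle can be sketched in a few lines. The version below is a toy, pure-Python illustration and not the project's JAX planner. It assumes a 2D point-mass model, a single target feature, and a cost made of distance-to-feature plus control effort, and it leaves out dynamic obstacles. All names and parameter values are hypothetical.

```python
import math
import random

def rollout_cost(start, controls, feature, dt=0.1, effort_weight=0.01):
    """Sum of distances to the feature along the rollout, plus control effort."""
    x, y = start
    cost = 0.0
    for vx, vy in controls:
        x, y = x + vx * dt, y + vy * dt  # assumed point-mass dynamics
        cost += math.hypot(feature[0] - x, feature[1] - y)
        cost += effort_weight * (vx * vx + vy * vy)
    return cost

def cem_plan(start, feature, horizon=10, samples=200, elites=20, iters=5, rng=None):
    """Cross-entropy method: sample control sequences, keep elites, refit a Gaussian."""
    rng = rng or random.Random(0)
    mean = [[0.0, 0.0] for _ in range(horizon)]
    std = [[1.0, 1.0] for _ in range(horizon)]
    for _ in range(iters):
        candidates = [
            [(rng.gauss(m[0], s[0]), rng.gauss(m[1], s[1])) for m, s in zip(mean, std)]
            for _ in range(samples)
        ]
        candidates.sort(key=lambda c: rollout_cost(start, c, feature))
        best = candidates[:elites]
        for t in range(horizon):
            for d in range(2):
                vals = [c[t][d] for c in best]
                mu = sum(vals) / elites
                var = sum((v - mu) ** 2 for v in vals) / elites
                mean[t][d] = mu
                std[t][d] = math.sqrt(var) + 1e-3  # floor keeps sampling alive
    return mean

def run_receding_horizon(start, feature, steps=20, dt=0.1):
    """Replan at every step and execute only the first planned control."""
    rng = random.Random(0)
    x, y = start
    for _ in range(steps):
        vx, vy = cem_plan((x, y), feature, rng=rng)[0]
        x, y = x + vx * dt, y + vy * dt
    return x, y
```

Running `run_receding_horizon((0.0, 0.0), (2.0, 0.0))` moves the point toward the feature. In a GPU implementation, the per-sample rollouts inside `cem_plan` are the part that would be evaluated in parallel.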

I took charge of evaluations, running experiments in diverse CARLA scenes, both static and with moving traffic. Compared to LADFN (our previous RL-based approach), DRNDEF was faster, more stable, and generalized better. It reduced localization drift by up to 76.76% with only minor increases (often <2%) in control cost. Importantly, it handled cases where semantic differences mattered: it moved toward platforms and poles even when trees had higher edge density, a distinction LADFN’s geometry-based heuristics couldn’t make.

This project taught me a lot about how learned perception and classical control can work together, and how explainability tools like GradCAM can be more than just visual aids—they can become functional components in a control pipeline.