ORB-SLAM2 Implementation

Simulating Monocular ORB-SLAM2 Using a Raspberry Pi

Acknowledgements

The project was inspired by Gautham Ponnu and Jacob George, who implemented a similar system for their MEng Design Project at Cornell University. Find their project report here. This project was funded by the SSN Research Center and completed in collaboration with my friends Varshini Kannan and Shilpa J Rudhrapathy.

Objective

To simulate, test, and deploy monocular ORB-SLAM2 on a mobile robot using Raspberry Pi and Pi Camera V2.

Pipeline Overview

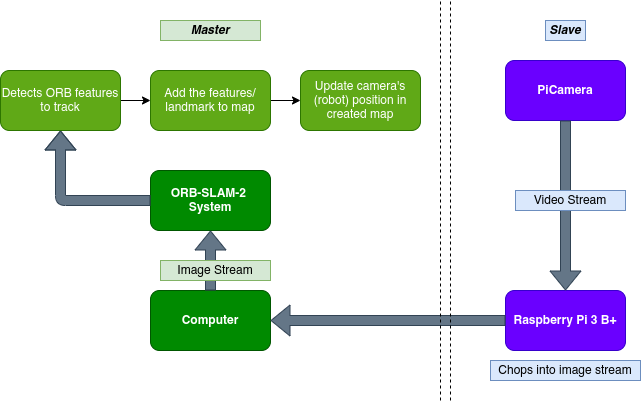

Master-Slave Pipeline for Deployment. A Slave Raspberry Pi 3B+ samples the Pi Camera video stream into individual images at regular intervals and sends them to a Master computer, which publishes the image feed as a ROS topic. ORB-SLAM2 subscribes to this topic and performs mapping and localization on the incoming frames.
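The hand-off from the Slave Pi to the Master is described only at a high level, and the transport is not specified. The sketch below assumes a plain TCP socket and illustrates two pieces of that hand-off: choosing which camera frames to keep when sampling at a fixed rate, and a simple length-prefixed framing so the Master can recover image boundaries from the TCP byte stream. The names `sample_frame_indices`, `pack_frame`, and `unpack_frames`, and the 8-byte header layout, are hypothetical and not the project's code. On the Master side, the received JPEG bytes would then be decoded and published as a `sensor_msgs/Image`; the monocular ROS example shipped with ORB-SLAM2 subscribes to `/camera/image_raw` by default.

```python
import struct
from typing import List, Tuple

# Hypothetical wire format (an assumption, not the project's protocol):
# an 8-byte big-endian header (uint32 frame index, uint32 payload length)
# followed by the encoded image bytes, e.g. a JPEG.
HEADER = struct.Struct(">II")


def sample_frame_indices(camera_fps: float, sample_hz: float, n_frames: int) -> List[int]:
    """Return the indices of camera frames kept when sampling at sample_hz."""
    if camera_fps <= 0 or sample_hz <= 0:
        raise ValueError("rates must be positive")
    step = camera_fps / sample_hz  # camera frames between consecutive samples
    kept = []
    next_due = 0.0
    for i in range(n_frames):
        if i >= next_due:  # first frame at or after the next sample time
            kept.append(i)
            next_due += step
    return kept


def pack_frame(index: int, payload: bytes) -> bytes:
    """Prefix an encoded image with its frame index and byte length (Slave side)."""
    return HEADER.pack(index, len(payload)) + payload


def unpack_frames(buffer: bytes) -> Tuple[List[Tuple[int, bytes]], bytes]:
    """Split a receive buffer into complete frames plus leftover bytes (Master side)."""
    frames = []
    offset = 0
    while len(buffer) - offset >= HEADER.size:
        index, length = HEADER.unpack_from(buffer, offset)
        end = offset + HEADER.size + length
        if end > len(buffer):
            break  # frame not fully received yet; wait for more bytes
        frames.append((index, buffer[offset + HEADER.size:end]))
        offset = end
    return frames, buffer[offset:]


# Usage: a 30 fps stream sampled at 5 Hz keeps every sixth frame.
kept = sample_frame_indices(30, 5, 30)
stream = pack_frame(0, b"jpeg-0") + pack_frame(6, b"jpeg-6")
frames, rest = unpack_frames(stream[:-2])  # simulate a partial TCP read
```

Because TCP delivers a byte stream rather than discrete messages, a single read may return half a frame or several frames at once; returning the leftover bytes lets the caller prepend them to the next read before parsing again.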

Sample Results

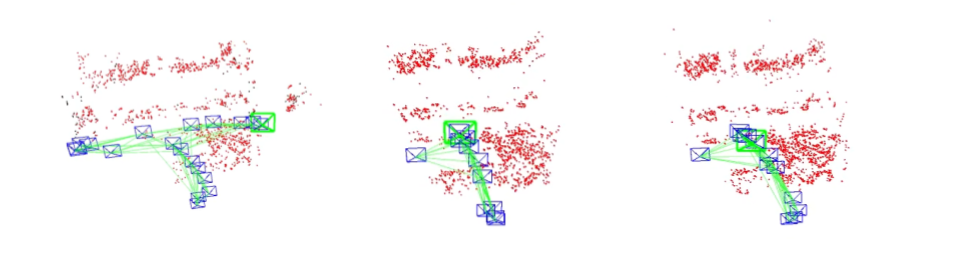

We tested the system by moving the Pi Camera freehand in an indoor setting (a study desk). Increasing the number of sampled ORB features produced denser reconstructions of the desk and reduced localization error.

Results in a sample indoor setting. From left to right: RViz windows of ORB-SLAM2 running with 1000, 1500, and 2000 sampled features. Red dots represent detected ORB features, green lines show the odometry of the ego-camera, and blue envelopes show the pose of the ego-camera at equal time intervals.
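In ORB-SLAM2, the number of features extracted per frame is set by the `ORBextractor.nFeatures` entry in the YAML settings file passed to the node (for the ROS monocular example, `rosrun ORB_SLAM2 Mono PATH_TO_VOCABULARY PATH_TO_SETTINGS_FILE`). Below is a minimal sketch for generating the 1000, 1500, and 2000 feature variants compared above, assuming the file contains exactly one such entry. The helper `with_n_features` and the abbreviated `sample` settings text are illustrative, not taken from this project.

```python
import re

# Matches a settings line such as "ORBextractor.nFeatures: 1000" and captures
# everything before the number so only the value is replaced.
_NFEATURES = re.compile(r"^(\s*ORBextractor\.nFeatures:\s*)\d+", re.MULTILINE)


def with_n_features(settings_text: str, n_features: int) -> str:
    """Return a copy of the settings text with ORBextractor.nFeatures replaced."""
    new_text, count = _NFEATURES.subn(lambda m: f"{m.group(1)}{n_features}", settings_text)
    if count != 1:
        raise ValueError(f"expected one ORBextractor.nFeatures entry, found {count}")
    return new_text


# Abbreviated, illustrative settings text (a real file also holds camera
# intrinsics, distortion coefficients, and viewer parameters).
sample = """%YAML:1.0
Camera.fps: 30.0
ORBextractor.nFeatures: 1000
ORBextractor.scaleFactor: 1.2
ORBextractor.nLevels: 8
"""

# One settings variant per feature budget used in the comparison.
variants = {n: with_n_features(sample, n) for n in (1000, 1500, 2000)}
```

Each variant could then be written to its own file and passed to the node in turn, keeping every other parameter fixed so that only the feature budget differs between runs.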